Looking to expand the footprint of its toolkit, which gives developers unified database software that works for both relational and post-relational databases, YugaByte has raised $16 million in a new round of funding.

For company co-founder and chief executive Kannan Muthukkaruppan, the new database software reduces programming complexity and frees developers from the risk of lock-in with any single cloud computing provider, as the leaders (Amazon, Microsoft and Google) jockey for pole position among software developers.

“YugaByte DB makes it possible for organizations to standardize on a single, distributed database to support a multitude of workloads requiring both SQL and NoSQL capabilities. This speeds up the development of applications while at the same time reduces operational complexity and licensing costs,” said Muthukkaruppan in a statement.

Muthukkaruppan and his fellow co-founders know their way around database software. Alongside Karthik Ranganathan and Mikhail Bautin, Muthukkaruppan built the NoSQL platform that powered Facebook Messenger and its internal time series monitoring system. Before that, Ranganathan and Muthukkaruppan had worked at Oracle. After Facebook, the two were integral to the development of Nutanix's hybrid infrastructure.

“These are tens of petabytes of data handling tens of millions of messages a day,” says Muthukkaruppan.

Ranganathan and Muthukkaruppan left Nutanix in 2016 to begin working on YugaByte’s database software. What’s important, founders and investors stress, is that YugaByte breaks any chains that would bind software developers to a single platform or provider.

Developers can move applications from one cloud provider to another, but they still have to maintain multiple databases across those systems to keep them interoperating.

“YugaByte’s value proposition is strong for both CIOs, who can avoid cloud vendor lock-in at the database layer, and for developers, who don’t have to re-architect existing applications because of YugaByte’s built-in native compatibility to popular NoSQL and SQL interfaces,” said Deepak Jeevankumar, a managing director at Dell Technologies Capital.

Jeevankumar’s firm co-led the latest $16 million financing for YugaByte alongside previous investor Lightspeed Venture Partners.

What attracted Lightspeed and Dell’s new investment arm was the support the company has from engineers in the trenches, like Ian Andrews, the vice president of products at Pivotal. “YugaByte is going to be interesting to any enterprise requiring an elastic data tier for their cloud-native applications,” Andrews said in a statement. “Even more so if they have a requirement to operate across multiple clouds or in a Kubernetes environment.”

With new software infrastructure, portability is critical, because data needs to move among different software architectures.

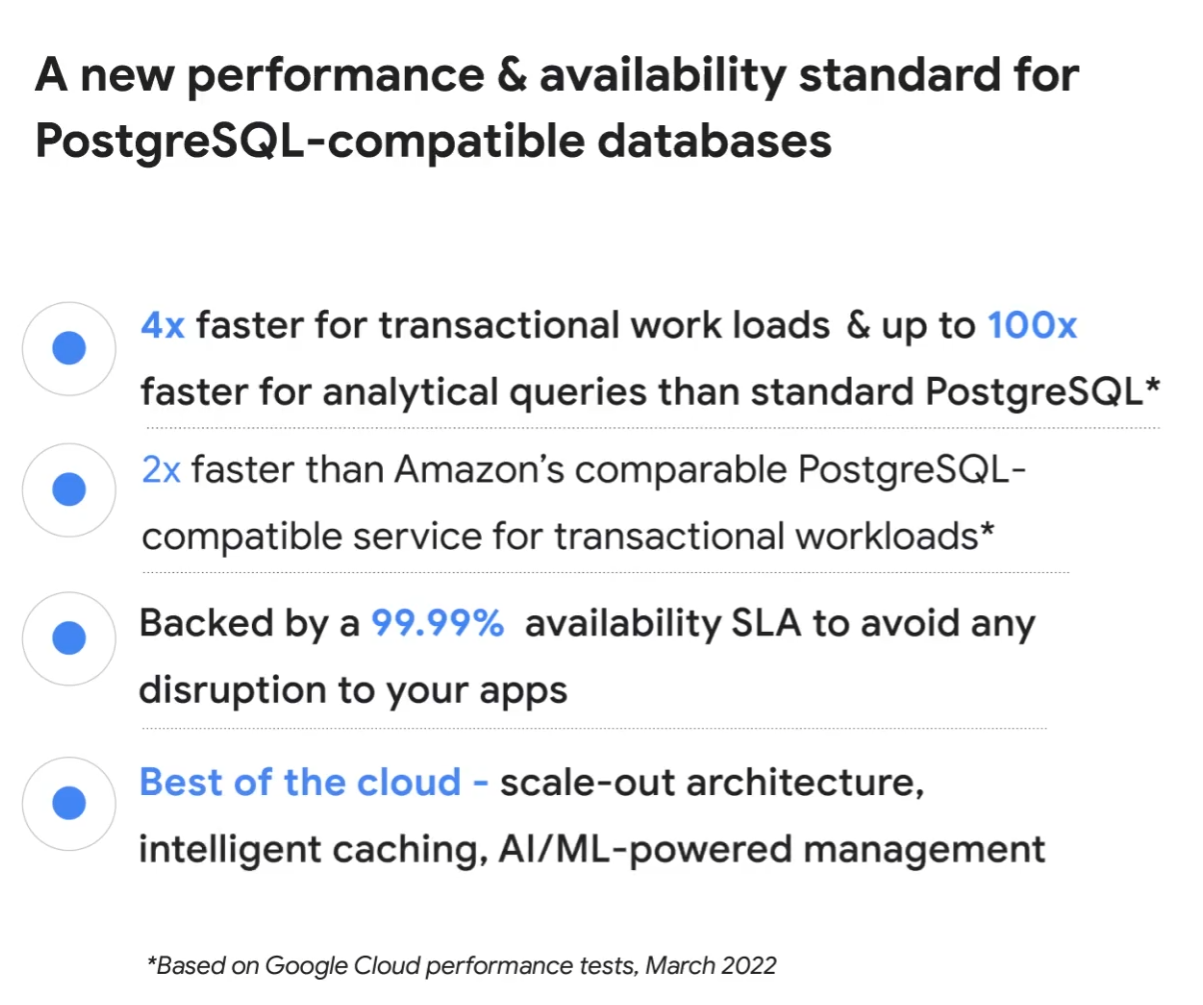

The problem is that traditional databases have a hard time scaling, and newer database technologies aren't always reliable when it comes to data consistency and durability. So developers have been using legacy database software from the likes of Oracle and PostgreSQL for their systems of record, and newer database software such as Microsoft Azure's Cosmos DB, Amazon's DynamoDB, Apache Cassandra (which the fellas used at Facebook) or MongoDB for distributed applications that need things like linear read/write scalability, auto-rebalancing, sharding and failover.

YugaByte supports the Apache Cassandra and Redis APIs along with PostgreSQL, a combination the company touts as the best of both the relational and post-relational database worlds.

Now that the company has $16 million more in the bank, it can begin spreading the word about the benefits of its new database software, says Muthukkaruppan.

“With the additional funding we will accelerate investments in engineering, sales and customer success to scale our support for enterprises looking to bring their business-critical data to the cloud,” he said in a statement.